A Brief History in Code Portability

Do you know why you can take a Python program and run it on any computer that has a Python interpreter installed? Because the computer industry has invested heavily in portability over the last 70 years. In the beginning, code wasn’t portable at all: programmers had to learn to program and operate a single computer model, which was often one of a kind. There is really no need for the concept of portability when you only have access to one computer. Even in the earliest days of computing there were some shared basic concepts, like registers and adding numbers together, but that was about it. Last weekend, I had the epiphany that most people don’t know (or have completely forgotten) this history and, hence, don’t really understand why container portability is such a nuanced thing.

In the beginning, there were no general-purpose computers, only calculating machines. Most calculating machines did a single task (algorithm) and were essentially hard-coded, in hardware, at the time of construction. It was called construction because these things were made from metal, not transistors. Let that sink in.

It wasn’t until the 1830s and 1840s that Charles Babbage and Ada Lovelace invented the idea of programming. He designed the first mechanical, general-purpose computer, called the Analytical Engine. She wrote software for it: programs and data were to be fed in on punch cards, and her 1843 notes include what is often called the first computer program. This was the first device designed to be programmed and re-programmed with different algorithms, because the algorithm wasn’t hard-coded into the machine itself. There was no portability, because there was really only one Analytical Engine, kinda: its construction was never completed.

Next up, nearly a hundred years later, came Colossus, ENIAC, EDVAC, and their brethren. To program one of these vacuum tube computers, you had to learn to operate thousands of switches and cables. You essentially had to be a computer operator and a computer programmer in one (kinda like cloud services), and learning how to program one of them did not mean you had any clue how to program the others. In fact, many early computers were developed in secret military projects, so portability was the last thing on the designers’ minds. These computers were programmed at the level we now call the Instruction Set Architecture (ISA). If you have ever programmed in assembly, imagine converting that to machine code without the help of an assembler; that’s how they rolled.

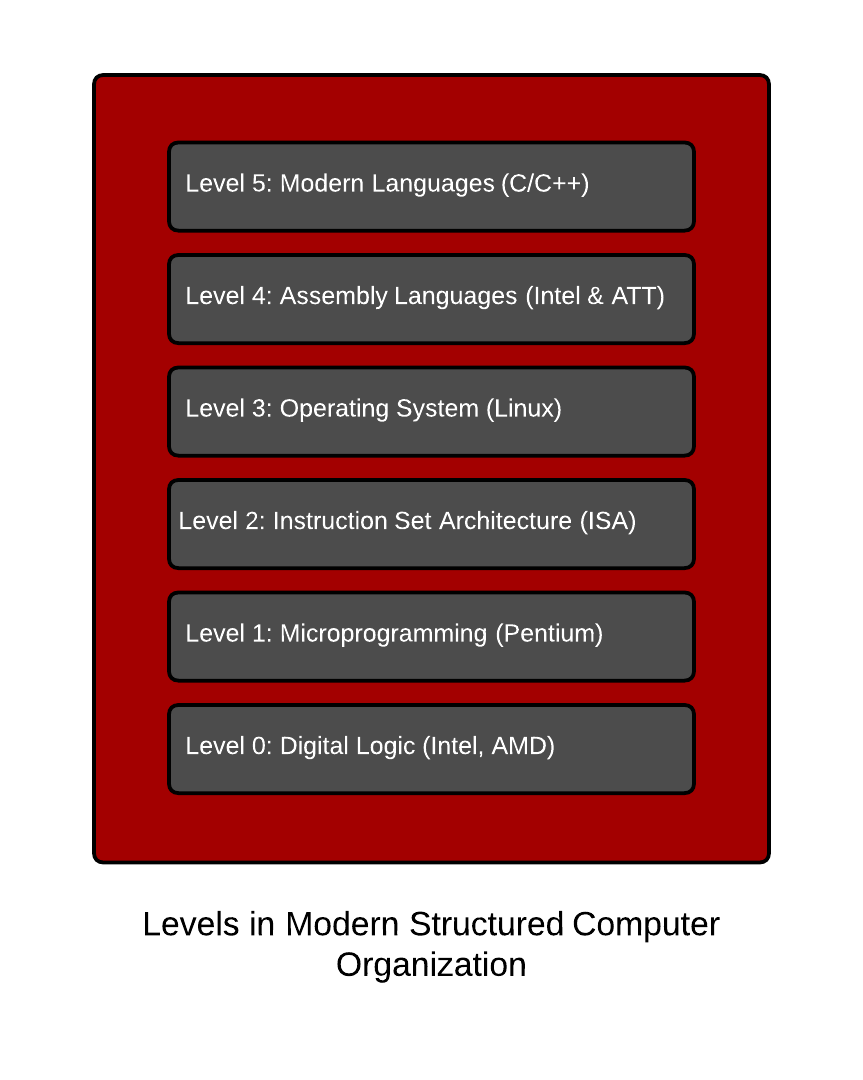

It wasn’t until some ridiculously smart cats like von Neumann and Seymour Cray came onto the scene that we really started to see multi-level computing take shape. We developed higher-level languages that humans could understand and lower-level languages that computers could run quickly. To connect the levels, we developed translation (e.g., C compilers and x86 assemblers) and interpretation (e.g., Python). Skipping a ridiculous amount of interesting Computer Organization history, we ended up with the six common layers that we see in modern computing:

- Level 5: Modern languages like C, which are typically portable because compilers translate them down to level 3.

- Level 4: Assembly languages like x86 assembly, which are portable across machines sharing the same ISA because assemblers translate them down to level 3.

- Level 3: Operating systems like Linux, which are partially interpreted. Think of ELF binaries, which must follow a specific format to run: they are typically x86 instructions packaged in a format that Linux knows how to load and manage. File, socket, memory, and process management are all dynamic and interpreted by the operating system at run time, through system calls.

- Level 2: Instruction Set Architectures (ISAs) like x86, ARM, PowerPC, or the Power ISA implemented by chips like POWER8. This layer is hard-coded. Intel and AMD implement nearly identical ISAs, but the microarchitectures beneath them are widely different. Also, remember that Mac OS X shipped “universal” binaries that contained code for two of these, one for PowerPC and one for Intel. And remember that Transmeta (where Linus Torvalds worked) did interpretation at this level, translating x86 instructions into its chips’ native VLIW instructions in software.

- Level 1: Microarchitecture, like what’s inside an Intel chip. Most programmers have never touched this and only know it from the microcode update message that appears when Linux boots. This layer is actually interpreted, which might make your brain explode.

- Level 0: Digital logic, like AND, NAND, and NOR gates. Each CPU obviously has its own implementation of this. The level below this requires knowledge of electrical engineering, which I don’t have 🙂
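To make the boundary between level 3 and level 2 a little more concrete: an ELF binary records, in its header, which ISA its instructions were compiled for. The Python sketch below reads the `e_machine` field from the first 20 bytes of an ELF header. The `ELF_MACHINES` table is only a partial list of values from the ELF specification. The helper names `elf_target` and `elf_target_of_file` are hypothetical and used for illustration; they are not part of any library.

```python
import struct

# Partial list of e_machine values defined by the ELF specification.
ELF_MACHINES = {
    3: "x86 (i386)",
    20: "PowerPC",
    21: "PowerPC 64-bit",
    40: "ARM (32-bit)",
    62: "x86-64",
    183: "AArch64 (64-bit ARM)",
}


def elf_target(header: bytes) -> str:
    """Return the ISA an ELF binary targets, given its first 20 header bytes."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    # e_ident[5] (EI_DATA) records the byte order of the remaining fields.
    ei_data = header[5]
    if ei_data == 1:
        byte_order = "<"  # little-endian
    elif ei_data == 2:
        byte_order = ">"  # big-endian
    else:
        raise ValueError(f"unknown ELF byte order (EI_DATA={ei_data})")
    # e_machine is a 16-bit field at offset 18: after e_ident (16 bytes) and e_type (2 bytes).
    (machine,) = struct.unpack_from(byte_order + "H", header, 18)
    return ELF_MACHINES.get(machine, f"unknown (e_machine={machine})")


def elf_target_of_file(path: str) -> str:
    """Read just enough of a file to identify the ISA it was built for."""
    with open(path, "rb") as f:
        return elf_target(f.read(20))
```

On a typical x86-64 Linux machine, `elf_target_of_file("/bin/ls")` should report `x86-64`. The same check on an ARM server should report `AArch64`. Linux will not run the first binary on the second machine without some translation or emulation layer. That is the level 2 portability problem in miniature.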

OK, so at this point you are saying to yourself: cool, but why am I learning this stuff? Because you need this background to understand Container Portability: Part 2: Code Portability Today, where we explore how containers are really about breaking the operating system (level 3) into two pieces and managing them separately.
