Code Portability Today

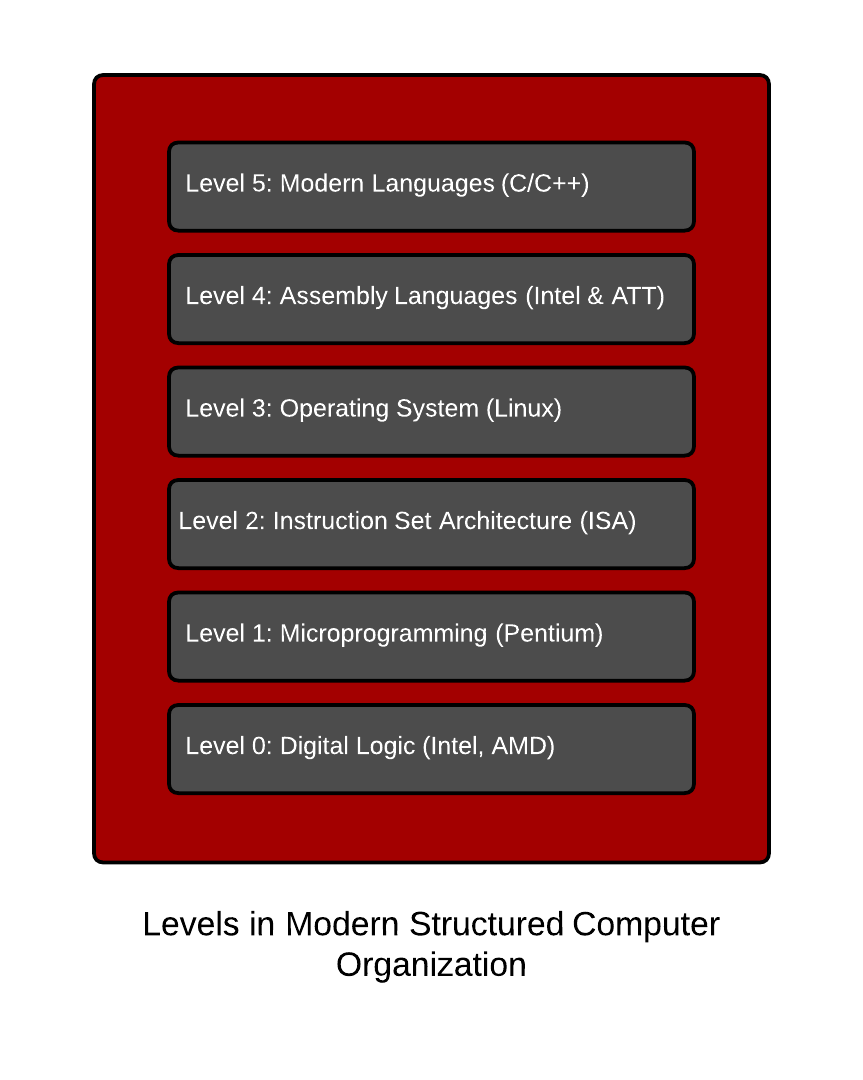

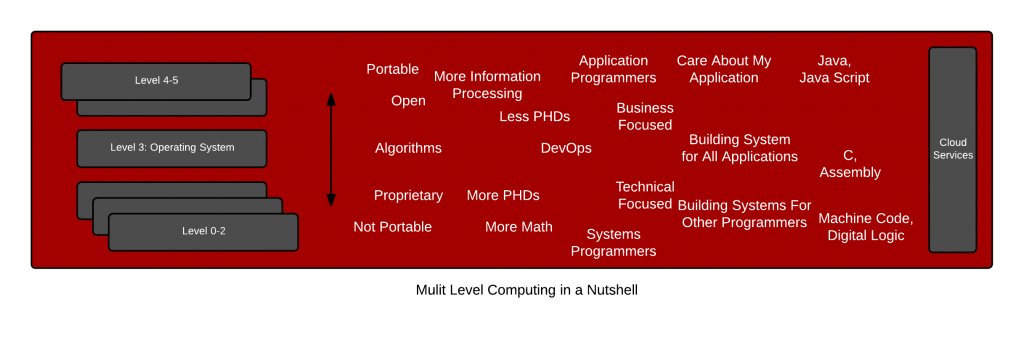

In Container Portability: Part 1: A Brief History in Code Portability, we explored the genesis of code portability and visited structured computer organization to highlight the six levels commonly found in modern computing. Revisiting those six levels, nobody debates the portability of the upper two. Application Programmers know that C code, assembly, and all of the interpreted languages built on those two are generally portable, as long as the code is retranslated (compiled, assembled) on the target hardware platform. Nor does anybody debate the lack of portability of the lower three levels. System Programmers understand that x86_64 code can generally only be run on x86_64 processors. People really don’t debate this too much.

However, we have all kinds of misunderstandings at level 3 because operating systems are not well understood, even though we use them every single day and even though DevOps culture and Full Stack Developers bring a wide range of skills. We have forgotten how operating systems work at their core because they mostly just work. But we need to understand that operating systems extend the level 2 instruction set for convenience: things like loading programs to and from disk, role based access controls, memory management, etc. Operating systems live in this no man’s land between hardware and application software. With containers becoming so popular, we really need to invest in understanding the operating system again. It’s not just for nerdy systems programmers (old school dudes with giant beards) anymore. Below is a lay of the land that shows the blind spot we have in how computers work: the old school full stack developers are missing.

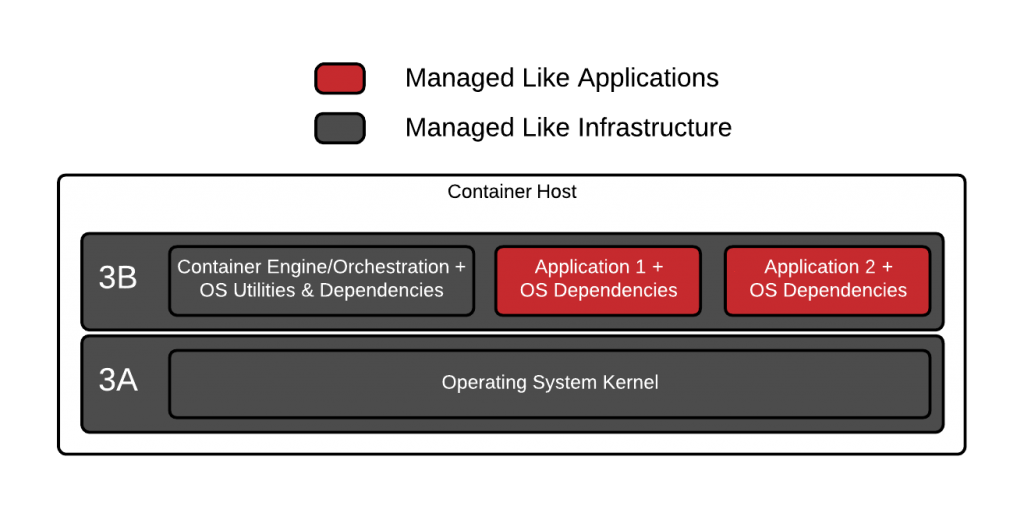

Let’s dig deeper and understand why people are so confused. With Docker and Linux containers, we are basically breaking level 3 (the operating system) into two parts. Let’s call them 3A, which will represent the kernel, and 3B, which will represent the user space. The container host has one copy of 3A, which is shared between all of the containers, daemons, and utilities that run on it. This single kernel, which runs on the container host, allows the system to boot, enables the hardware, and provides a place for all of the containers to run (process management). The container host and every container image on it each have their own copy of 3B. These container images can be copies of content from any mix of Fedora, Ubuntu, Debian, Gentoo, Alpine, CentOS, RHEL, or any other Linux distribution. They can even be custom built images that have their own version of glibc and other libraries built from scratch. There is nothing in the Linux operating system or container tooling that forces users to match the container images with the container host, and this can lead to both spatial and temporal problems.
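To make the 3A/3B split concrete, here is a minimal sketch in Python that reports the two halves separately. It assumes a Linux system that ships `/etc/os-release`; the function names and the `kernel_3a` / `user_space_3b` labels are made up for this illustration. The kernel release comes from the running kernel, while the distribution name comes from a file in the user space.

```python
import platform
from pathlib import Path


def parse_os_release(text):
    """Parse os-release style KEY=value lines into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Values may be wrapped in single or double quotes.
        fields[key] = value.strip().strip('"').strip("'")
    return fields


def describe_layers(os_release_path="/etc/os-release"):
    """Return the kernel release (3A) and the user space distribution (3B)."""
    path = Path(os_release_path)
    user_space = parse_os_release(path.read_text()) if path.exists() else {}
    return {
        # Reported by the running kernel, which every container on the host shares.
        "kernel_3a": platform.release(),
        # Read from a file inside the user space, which each image carries itself.
        "user_space_3b": user_space.get("PRETTY_NAME", "unknown"),
    }


if __name__ == "__main__":
    for layer, value in describe_layers().items():
        print(f"{layer}: {value}")
```

Run on the container host and then inside a container built from a different distribution, a script like this would be expected to print the same kernel release but a different user space name, since only 3B travels with the image.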

Even among Linux distributions built from very similar versions of the kernel, glibc, crypto libraries, etc., there can be incompatibilities in the way they were built or configured (spatial problems). Two Fedora boxes configured completely differently might not work as expected. For example, I once moved a container internals lab I was working on from container hosts configured with Device Mapper to ones configured with Overlay2, and all kinds of things broke inside the containers. Heck, even yum didn’t work right. These types of problems are compounded over time (temporal problems). Imagine taking an Ubuntu 17 image and running it on a Fedora 7 container host. Putting aside the fact that Fedora 7 was released long before Docker, would this work?

How do we build a test matrix to record and codify what will and won’t work? Now imagine a day in the not too distant future where the versions are Ubuntu 31 and Fedora 57. Which combinations of versions will work together, and who is testing the millions of permutations? Think about performance regressions, security regressions, and just plain architectural problems because implementations in the kernel and user space have changed over time. There is no versioned, cross distribution API that defines the entire interface between 3A and 3B. It was always just assumed that you would build and run Fedora 25 binaries on Fedora 25 and Ubuntu 17 binaries on Ubuntu 17.
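As a rough sketch of how quickly such a matrix grows, the Python below pairs every container host release with every container image release. The release lists are hypothetical and chosen only for illustration, and `build_matrix` is a made-up helper, not part of any container tooling.

```python
from itertools import product


def build_matrix(host_releases, image_releases):
    """Pair every container host release with every container image release."""
    return [
        {"host": host, "image": image, "same_release": host == image}
        for host, image in product(host_releases, image_releases)
    ]


if __name__ == "__main__":
    # Hypothetical release lists, chosen only to show how fast the matrix grows.
    hosts = [f"fedora-{v}" for v in range(25, 58)]
    images = hosts + [f"ubuntu-{v}" for v in range(17, 32)]
    matrix = build_matrix(hosts, images)
    matched = sum(1 for cell in matrix if cell["same_release"])
    print(f"{len(matrix)} host/image pairs, {matched} on a matching release")
```

This only counts two distributions and ignores kernel configuration, storage drivers, and hardware, each of which would multiply the number of cells again.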

The distribution type and version were “a thing” and that made it easy. Fedora binaries are built with a Fedora compiler, with the build flags that make sense in a given version of Fedora. Same with Debian, Ubuntu, and any other distribution of Linux. In fact, hardcore Gentoo users (I must confess that I did this) were so obsessed with compatibility that they went as far as compiling the kernel, the compiler, and all of the binaries on the exact hardware that they were using. This was done to eke out every last bit of performance, and it was called a stage 1 installation. Mega compatibility.

Since there is no fully defined, cross distribution API definition between levels 3A and 3B, we rely on the Linux system call interface. The Linux system call interface is very stable, which has tricked us into believing that *this* is enough. That’s because a simple web server doesn’t need much from the kernel, basically just system calls to open files and open sockets. But as containers have become more and more popular, people are trying to tackle more and more workloads with them. As containerized workloads expand beyond simple web servers, compatibility becomes more and more of a problem. As I described here, there can and will be all kinds of changes over time. With the consumption of more and more specific hardware, like FPGAs on Amazon AWS or Solarflare hardware in High Performance Computing (HPC) environments, as well as architectural changes to different Linux distributions (how many times have /dev and /proc changed?), things will get more difficult.
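To illustrate how little a simple web server asks of the kernel, here is a minimal sketch in Python. It is not a real server, and the function names, default host, and default port are assumptions made for this example. The `os` and `socket` calls used here are thin wrappers over the file and socket system calls mentioned above.

```python
import os
import socket


def read_file(path):
    """Read a whole file using thin wrappers over open(2), read(2), and close(2)."""
    fd = os.open(path, os.O_RDONLY)
    try:
        chunks = []
        while True:
            chunk = os.read(fd, 65536)
            if not chunk:
                break
            chunks.append(chunk)
        return b"".join(chunks)
    finally:
        os.close(fd)


def respond(conn, path):
    """Send a minimal HTTP/1.0 response carrying the file's bytes over a connected socket."""
    body = read_file(path)
    header = b"HTTP/1.0 200 OK\r\nContent-Length: " + str(len(body)).encode("ascii") + b"\r\n\r\n"
    conn.sendall(header + body)


def serve_once(path, host="127.0.0.1", port=8080):
    """Accept one connection and answer it: socket(2), bind(2), listen(2), accept(2)."""
    with socket.create_server((host, port)) as listener:
        conn, _ = listener.accept()
        with conn:
            conn.recv(65536)  # read and ignore the request for this sketch
            respond(conn, path)
```

Everything here goes through a handful of long-stable system calls, which is why a workload like this tends to move between distributions without trouble. Workloads that touch specialized hardware or depend on the layout of /dev and /proc lean on much more than this.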

I would not advocate for the Gentoo Stage 1 level of compatibility, but I would warn people that just because containers make it so easy to move binaries between distributions doesn’t mean you should. People are experimenting, and heck, it works most of the time, but it’s just that: an experiment. So, what’s the solution? Well, there are several different adventures to choose from, which we will explore in Container Portability: Part 3: The Paths Forward.
