Background

Yesterday, I noticed an interesting tidbit from Rackspace calculating the cost of data over the last Decade of Storage. Of course, there are a few bumps in the road that made me chuckle. Interestingly, over the last couple of years it shows the cost dropping from $0.40/GB to $0.06/GB. This ties together a whole bunch of things I have thought about over the last couple of years. First, now is a wonderful time to be a user buying storage for personal audio and video. Second, regular people are going to have to start learning data management strategies. Finally, this cost isn’t even close to what it is for me in my data center. It is easy for us to celebrate the cheap cost of raw storage while losing track of the total cost of ownership for data. I will elaborate.

Security and convenience add a lot to the cost of data. For a typical home user, it is easy to go buy a 2TB or 3TB disk and throw a bunch of video on it. The problem comes when you want to back it up. If you want a single backup, you need to double the space. If you also want pre- and post-production copies, quadruple it. At $0.06/GB raw, we are now at $0.24/GB, not counting any of the time spent organizing. This is a common scenario for hobbyist photographers and videographers.
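The multiplication above can be written out as a tiny calculation. This is a minimal sketch that assumes the $0.06/GB raw-disk figure from the Rackspace chart and ignores organization time; the function name is hypothetical.

```python
def effective_cost_per_gb(raw_cost_per_gb: float, copies: int) -> float:
    """Cost per GB of *usable* data when every GB is stored `copies` times."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return raw_cost_per_gb * copies


RAW = 0.06  # $/GB for raw disk, the figure quoted from the Rackspace chart

scenarios = [
    ("single copy", 1),
    ("one backup", 2),
    ("pre/post production copies", 4),
]
for label, copies in scenarios:
    print(f"{label:28s} ${effective_cost_per_gb(RAW, copies):.2f}/GB")
```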

Off-site backups at ZumoDrive or Dropbox, at the time of this writing, will cost an additional $0.50/GB. Even at JungleDisk, it will cost you an extra $0.15/GB. All of this assumes you even have a fast enough Internet connection to get a TB of data off site. I suspect most of us are doing some inconvenient tiered approach to backup. For an average user, this is a pain.
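To put the "fast enough Internet connection" caveat into numbers, here is a rough sketch of how long a one-time upload of 1 TB takes. The uplink speeds are hypothetical examples, and the calculation assumes the link runs flat out with no protocol overhead, so real transfers will be slower.

```python
SECONDS_PER_DAY = 86_400


def upload_days(data_bytes: float, uplink_mbps: float) -> float:
    """Days needed to push data_bytes over an uplink of uplink_mbps megabits/s.

    Assumes a sustained, fully utilized link with no protocol overhead.
    """
    bits = data_bytes * 8
    seconds = bits / (uplink_mbps * 1_000_000)
    return seconds / SECONDS_PER_DAY


ONE_TB = 10**12  # decimal terabyte, as drive vendors count it

for mbps in (1, 5, 20):  # hypothetical uplink speeds
    print(f"{mbps:>3} Mbit/s uplink: {upload_days(ONE_TB, mbps):6.1f} days")
```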

From my perspective, the cost of storage in the data center is contributing to this problem. Three years ago, our cost was nearly $1/GB/mo. Just playing with the numbers, if I were to buy a brand new solution today, I could get the cost down to about $0.12/GB/mo. What a change in just three years.
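As a rough illustration of how steep that drop is, the sketch below converts the two data-center figures ($1/GB/mo three years ago, about $0.12/GB/mo today) into a total reduction and an implied constant yearly rate of decline. Assuming a constant rate is a simplification; real prices fall in steps as hardware generations change.

```python
def annual_decline(old_price: float, new_price: float, years: float) -> float:
    """Constant yearly fractional decline that turns old_price into new_price."""
    yearly_factor = (new_price / old_price) ** (1 / years)
    return 1 - yearly_factor


total_drop = 1 - 0.12 / 1.00  # fraction of the original cost that disappeared
per_year = annual_decline(1.00, 0.12, 3)
print(f"total drop: {total_drop:.0%}, about {per_year:.0%} per year")
```

The yearly figure works out to roughly half the price disappearing each year.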

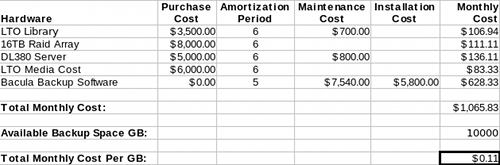

The Numbers

I just wanted to quickly display the numbers so that fellow systems administrators could take a peek. I calculate the numbers with a 6-year amortization, which means I consider the hardware dead after six years. These numbers can get really hairy because sometimes you replace piece parts of the environment during the 6-year schedule. Also, notice that since we are using Bacula, which is open source, there is no purchase cost for the backup software. There is still a huge cost in installation and maintenance, and this is where Microsoft aims its attacks on open source. It works for us, but not everyone chooses to do business this way; just don’t let emotion lead you to underestimate the cost.

Generally, this means the result is never better than an estimate, but that still makes these schedules useful for deciding where to upgrade strategically. Upgrading can cut cost, but one must also calculate migration costs carefully and accurately. Think through each type of data you have and what its migration will involve, then estimate the time.
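One way to follow that advice is to list each type of data with its size, an assumed copy throughput, and the hands-on sysadmin hours it needs, then total the estimate. The data types, sizes, rates, and hourly cost below are hypothetical placeholders, not figures from our environment.

```python
from dataclasses import dataclass


@dataclass
class DataSet:
    name: str
    size_gb: float
    copy_rate_gb_per_hour: float  # assumed effective throughput for this data type
    handling_hours: float  # hands-on sysadmin time: planning, verification, cutover


def migration_estimate(datasets: list[DataSet], hourly_rate: float) -> tuple[float, float]:
    """Return (total wall-clock copy hours, cost of hands-on sysadmin time)."""
    copy_hours = sum(d.size_gb / d.copy_rate_gb_per_hour for d in datasets)
    labor_cost = sum(d.handling_hours for d in datasets) * hourly_rate
    return copy_hours, labor_cost


# Hypothetical inventory; replace with your own data types and measurements.
inventory = [
    DataSet("file shares", 2_000, 100, 16),
    DataSet("mail store", 500, 50, 24),
    DataSet("database dumps", 300, 150, 8),
]

hours, cost = migration_estimate(inventory, hourly_rate=60)
print(f"~{hours:.0f} h of copying, ~${cost:,.0f} of sysadmin time")
```

Keeping copy time and hands-on time separate matters because a long unattended copy is cheap, while the hours spent verifying and cutting over are what actually show up as installation cost.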

Key Elements

- Purchase Cost: Obviously, how much it costs to purchase the necessary hardware. Be careful when calculating media costs for tape drives; I have found that I usually underestimate this cost.

- Amortization Period: This is calculated differently for taxes, but for our purposes, it is how long the hardware will stay in a production environment.

- Maintenance Cost: This is the annual cost of maintenance. It is probably one of the most underestimated costs. We use good hardware with support contracts in place, but if you skimp and buy a cheap RAID array, this could burn you.

- Installation Cost: This is basically how many hours of sysadmin time it will take to install and migrate. This can be wildly underestimated if not done carefully.

- Total Monthly Cost: This is the total monthly cost for the environment.

- Available Backup Space: This is the total number of usable GB provided by the environment.

- Total Monthly Cost per GB: This is the number everyone loves to talk about.
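Putting the key elements together, a minimal sketch of the per-GB calculation might look like the following. It assumes purchase and installation are one-time costs spread evenly over the amortization period and that maintenance is an annual cost spread over 12 months; the exact combination behind the figure above may differ, and the example inputs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StorageEnvironment:
    purchase_cost: float  # one-time hardware (and media) purchase, $
    installation_cost: float  # one-time sysadmin time for install/migration, $
    maintenance_per_year: float  # annual support contracts, $
    amortization_years: float  # how long the hardware stays in production
    available_gb: float  # usable backup space, GB

    def total_monthly_cost(self) -> float:
        months = self.amortization_years * 12
        one_time = (self.purchase_cost + self.installation_cost) / months
        return one_time + self.maintenance_per_year / 12

    def monthly_cost_per_gb(self) -> float:
        return self.total_monthly_cost() / self.available_gb


# Hypothetical numbers for illustration only.
env = StorageEnvironment(
    purchase_cost=64_800,
    installation_cost=7_200,
    maintenance_per_year=6_000,
    amortization_years=6,
    available_gb=10_000,
)
print(f"${env.total_monthly_cost():,.2f}/mo, ${env.monthly_cost_per_gb():.3f}/GB/mo")
```

Because every term is divided by the usable space, growing Available Backup Space without a matching jump in maintenance is the cheapest way to push the per-GB number down.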

Conclusion

Calculating the true cost of storage is tough business when the price is sliding all the time; in that respect, it can feel a bit like shorting stock. Normally, this problem is left to the CTO, but I figured it couldn’t hurt to let other sysadmins in on a bit of street MBA knowledge.
