The Container Host *is* the Container Engine, and Container Image Compatibility Matters

Have you ever wondered how containers are so portable? How is it possible to run Ubuntu containers on CentOS, or Fedora containers on CoreOS? How does all of this just magically work? As long as I run the docker daemon on all of my hosts, everything will just work, right? The answer is… no. I am here to break it to you: it’s not magic. I have said it before, and I will say it again: containers are just fancy Linux processes. There is not even a container object in the Linux kernel; there never has been. So, what does all of this mean?

Well, it means two very important things. First, the work of organizing and creating a container is done in user space. Stated another way, the docker daemon, libcontainer, runc, rkt, etc. handle a user’s API call and turn it into system calls to the kernel (clone() with namespace flags, instead of a plain fork() or exec()), and voilà, a process is created in the kernel. Second, there is no layer of abstraction like there is with virtualization. That means x86_64 containers must run on x86_64 hosts, ARM containers must run on ARM hosts, and Microsoft Windows containers must run on Microsoft Windows hosts. The docker daemon provides no compatibility guarantees; any incidental compatibility is provided by the Linux kernel and glibc. In fact, different versions of the docker daemon may introduce their own compatibility problems, because the daemon is just a user space process that relies on system calls to do all of its work.
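Because nothing translates instructions between the image and the host, the first sanity check is simply whether the image was built for the host’s OS and CPU. The sketch below assumes you have already read the image’s `os` and `architecture` values (OCI image configs use Go-style names such as `linux` and `amd64`); the mapping table and the function names `host_oci_arch` and `platform_matches` are hypothetical helpers for illustration, not part of any container tool’s API.

```python
import platform
from typing import Optional

# Hypothetical, non-exhaustive mapping from `uname -m` style machine names
# to the Go-style architecture names used in OCI image configs.
UNAME_TO_OCI_ARCH = {
    "x86_64": "amd64",
    "AMD64": "amd64",   # what Windows reports for x86_64
    "aarch64": "arm64",
    "armv7l": "arm",
    "ppc64le": "ppc64le",
    "s390x": "s390x",
}


def host_oci_arch(machine: Optional[str] = None) -> str:
    """Map a machine name (default: this host's) to an OCI-style name."""
    machine = machine or platform.machine()
    return UNAME_TO_OCI_ARCH.get(machine, machine)


def platform_matches(image_os: str, image_arch: str,
                     system: Optional[str] = None,
                     machine: Optional[str] = None) -> bool:
    """Return True only if the image targets this host's OS and CPU.

    Containers share the host kernel and execute natively on its CPU;
    there is no virtualization layer to translate a mismatched binary.
    """
    host_os = (system or platform.system()).lower()  # "Linux" -> "linux"
    return image_os == host_os and image_arch == host_oci_arch(machine)
```

For example, `platform_matches("linux", "arm64", system="Linux", machine="x86_64")` returns `False`. Passing `system` and `machine` explicitly keeps the check testable; leaving them out inspects the host the code runs on. Note that a match here is only the floor: it says the binaries can execute, not that the kernel, engine, and image will behave well together.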

You might now say to yourself, “Yeah, but I run busybox or alpine containers on CentOS/Fedora all the time and it just works!” That’s true, it does work most of the time, but I would like to highlight some questions I have run into over the last four-ish years of working with docker and talking to literally thousands of people about the problems they see:

- What happens when your container image expects to find a file in /proc, or /dev?

- What happens if the container image is vastly older than the container host, say 1, 3, 5, or even 10 years?

- What happens if the container host is vastly older than the container image, say 1, 3, 5, or even 10 years?

- Which distributions of Linux work together and who is testing this?

- Would you run binaries from different Linux distributions in production?

- Who fixes the kernel, container engine, or container image bugs and tests for regressions?

- What happens if your container expects a certain kernel module to be loaded and running?

- How do we detect performance regressions in the kernel or glibc?

- What happens if the docker daemon triggers a kernel bug while creating a container on a kernel version it was never tested or certified against?

- What happens if your container does more than just open() files, say, it needs access to dedicated hardware, or makes syscalls that trigger special hardware?

- What happens if the glibc inside your container doesn’t use hardware-accelerated routines because the underlying kernel didn’t enable them? (Yes, this can happen.)

- Etc., etc., etc.: security, performance, and architectural changes over time…
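Many of these questions start with knowing which kernel and which libc a process is actually running against. The sketch below is a hypothetical helper, not a tool from the docker ecosystem: it uses only the Python standard library (`platform.release()`, `platform.machine()`, `platform.libc_ver()`) and adds an assumed parser, `kernel_version_tuple`, for `uname -r` style strings so that kernel ages can be compared.

```python
import platform
import re
from typing import Dict, Tuple


def kernel_version_tuple(release: str) -> Tuple[int, int, int]:
    """Extract (major, minor, patch) from a `uname -r` style release string."""
    m = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if m is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    major, minor, patch = m.groups()
    return int(major), int(minor), int(patch or 0)


def runtime_fingerprint() -> Dict[str, str]:
    """Record the kernel and libc this process actually runs against.

    Run inside a container, the kernel release is the *host's* (the kernel
    is shared), while the libc is whatever the *image* shipped.
    """
    libc_name, libc_version = platform.libc_ver()  # ('', '') if undetectable
    return {
        "kernel_release": platform.release(),
        "machine": platform.machine(),
        "libc": f"{libc_name} {libc_version}".strip(),
    }
```

For instance, `kernel_version_tuple("3.10.0-514.el7.x86_64")` returns `(3, 10, 0)`. Running `runtime_fingerprint()` inside a container makes the mix visible: a kernel from one build and era, a glibc from another. Nothing in this sketch tells you whether that combination is safe; that still takes someone testing it.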

Well, do you have an answer for each of the above questions? Can you honestly say to yourself, “Yeah, I can fix that, no problem”? I have seen all of the above happen, and this is just the beginning. We are still in the early days and haven’t run into even a small percentage of the problems that we will as this technology ages. When we are using kernels, container engines, and images of vastly different ages, built by Linux distributions that take wildly different approaches to building kernels and binaries, we will see more and more problems. As workloads expand beyond simple web servers, which really only need system calls to open files and TCP sockets, we will see more and more problems. On top of this, the problem magnifies over time, as all of these components age (not so gracefully).

There is a much tighter coupling between the container image, container engine, and container host than most people think. It would seem that even Docker agrees, as they developed the Moby project and LinuxKit to tightly couple these three things (host, engine, image) in what amounts to a unikernel-like structure. Tons of technical people are out there showing demos of interoperability that don’t tell the whole story and, in fact, lead people down a really bad path.

The crotchety old systems administrator in me says: kids these days don’t understand the user space/kernel split. They don’t understand the Unix design principles. I think Dan Woods is right: there is a coming reliability crisis. If you have ever done a Gentoo Stage 1 install, then you know exactly why you run binaries that are designed for and built with the same kernel they run on. Save yourself the pain: if you are an Ubuntu 16 shop, run the docker engine that comes with Ubuntu 16, and run Ubuntu 16 container images. If you are a Fedora Server 25 shop, run the container engine that comes with Fedora Server 25, and run Fedora Server 25 images. If you are a RHEL 7.3 shop, do the same thing. Don’t mix and match distributions and versions; it’s crazy talk. It’s fun to experiment and see what will work, but don’t deploy production applications this way.
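The “same distribution, same version” rule can be checked mechanically by comparing the `ID` and `VERSION_ID` fields of the host’s `/etc/os-release` with the one inside the image. The sketch below is a simplified, hypothetical parser: it strips surrounding quotes but ignores the backslash escapes the os-release format allows, and it assumes you have already obtained the image’s os-release text (for example, from an extracted image filesystem; how you get it is left open).

```python
from typing import Dict


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE os-release content, stripping outer quotes."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and malformed lines
        key, _, value = line.partition("=")
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        fields[key.strip()] = value
    return fields


def same_distribution(host_text: str, image_text: str) -> bool:
    """True only if host and image report the same distro ID and version."""
    host, image = parse_os_release(host_text), parse_os_release(image_text)
    keys = ("ID", "VERSION_ID")
    if any(k not in host or k not in image for k in keys):
        return False  # missing data is treated as a mismatch, not a pass
    return all(host[k] == image[k] for k in keys)
```

On the host side, Python 3.10 and later can also read the file directly with `platform.freedesktop_os_release()`. Treating missing fields as a mismatch is a deliberate choice here: an image you cannot identify is exactly the kind you should not assume is compatible.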

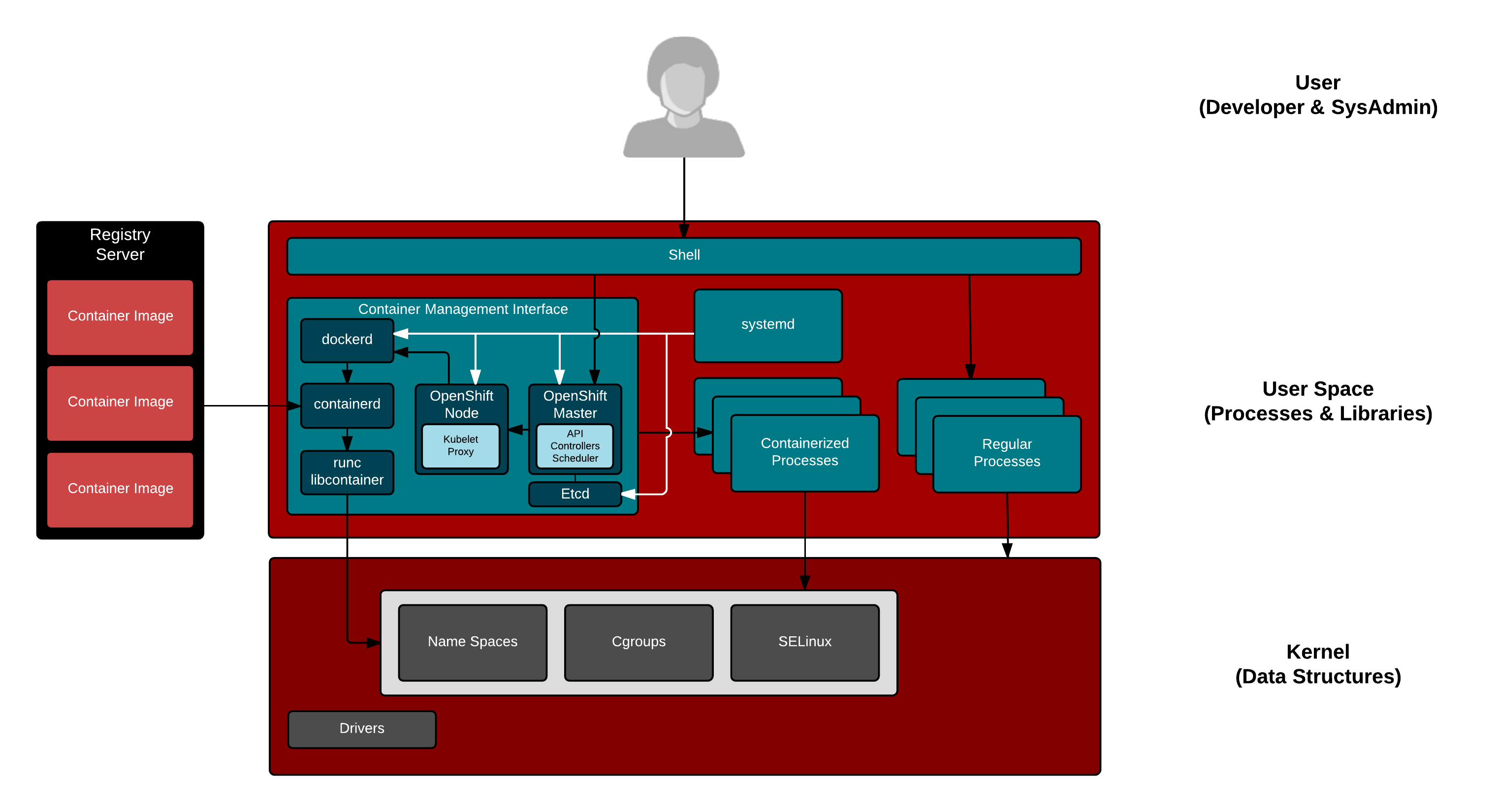

The above graphic shows all of the moving parts in a typical Kubernetes environment. If you are setting up a large, distributed systems environment with container orchestration such as Kubernetes, across 100s if not 1000s of hosts, there are already a lot of moving parts, so why give yourself another headache?
