Background

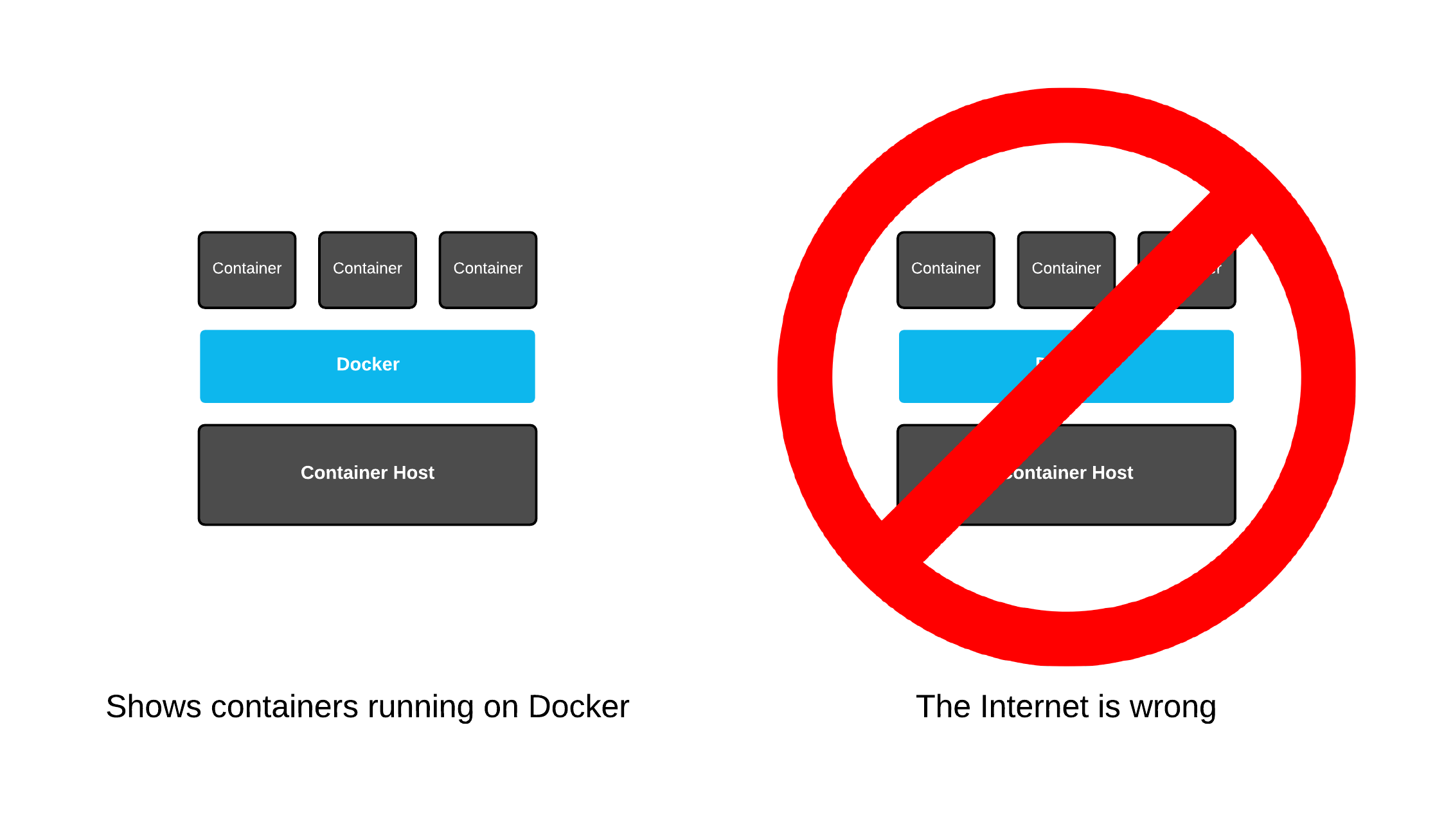

I’m here to tell you that somebody on the Internet is wrong! Actually, many people. If you have ever searched Google for the words “Docker Architecture”, you may have found a drawing that implies that Docker is some sort of blue box which sits on top of an operating system and runs containers. That makes sense, right? Wrong!

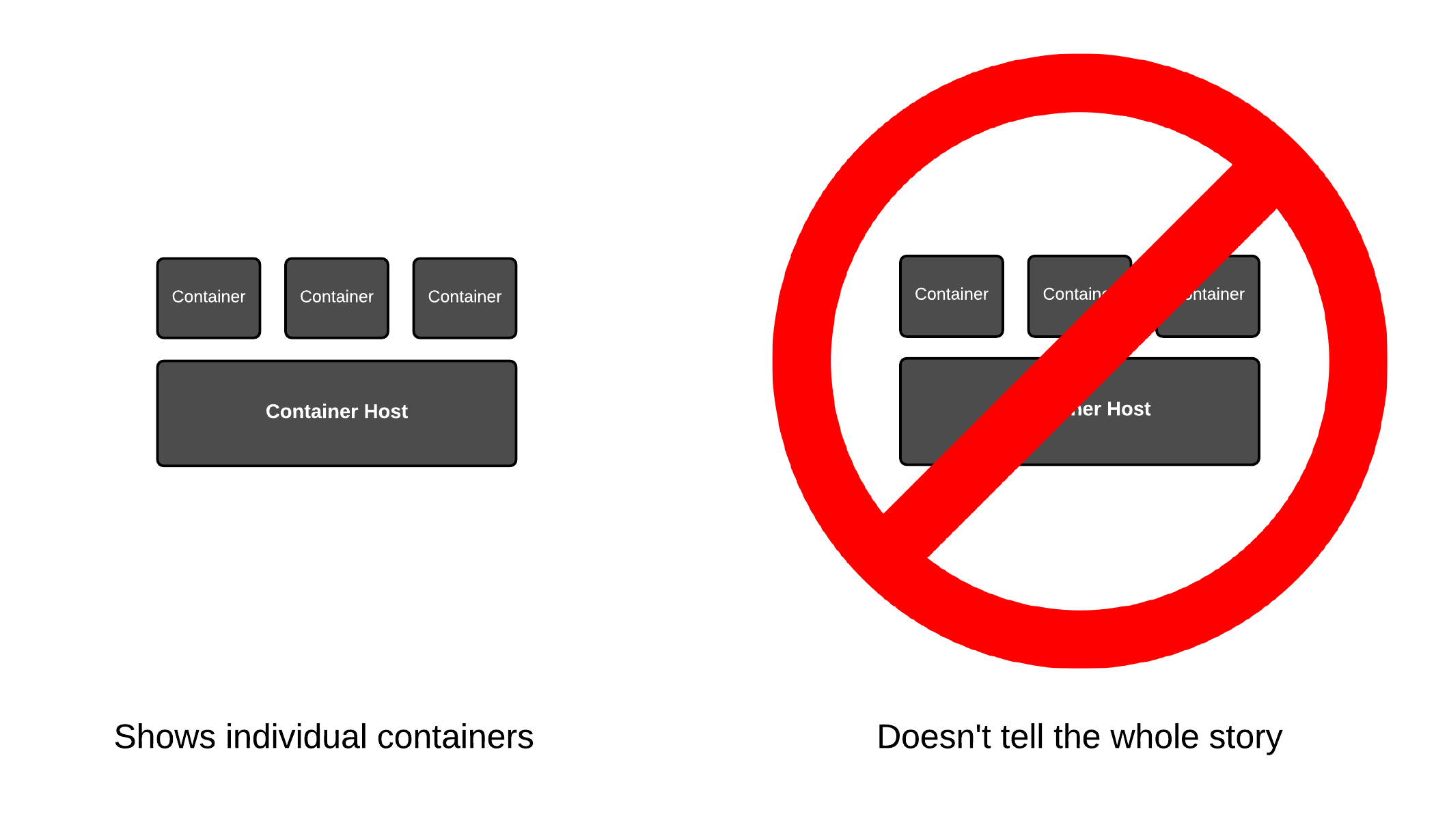

Containers don’t run on Docker, and for that matter they don’t run on Kubernetes either. They run just like regular programs: on an operating system. But there’s another common problem that goes too far the other way. Many drawings only show containers running on an operating system, with no sign of Docker. That doesn’t tell the whole story either.

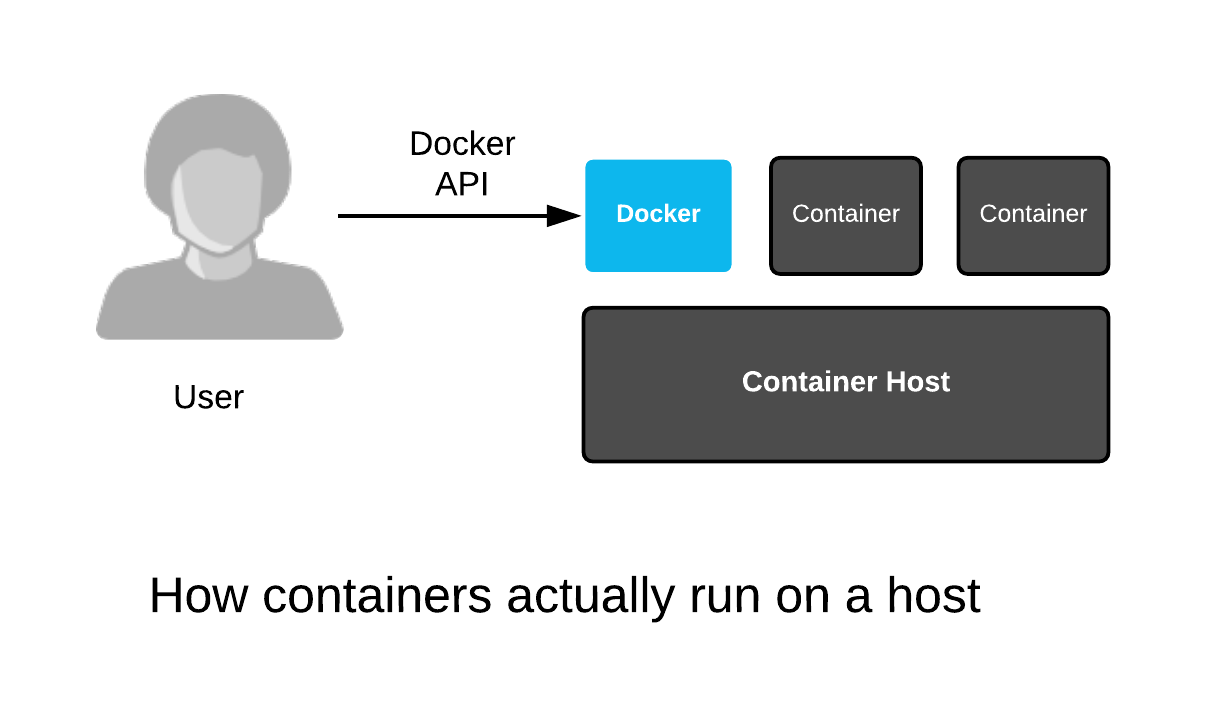

Let’s clarify what we are talking about when we say the word Docker. Docker is a company, but it’s also shorthand for an API, as well as a program that helps run containers. Most of the time, when architects refer to Docker, they mean the program, specifically a type of program called a container engine. Container engines run as a privileged program on an operating system and make it easy to run containers. Docker gained a lot of traction by being the first container engine in town, but nowadays there are others like CRI-O and rkt which do all kinds of cool things.

This new type of program, called a container engine, changed how containers were consumed. Historically, containers like Solaris Zones or LXC were treated just like virtual machines and required the installation of an operating system in the container using the normal installation method. Think clicking next, next, next in an installer. With the modern era of container engines, things got a lot easier and more automated.

Container engines do two major things. First, they provide an API for starting, stopping, and caching containers locally. Second, they remove the need to install an operating system, instead consuming programs and libraries through container images, such as those in the OCI format.
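To make the first of those jobs concrete, here is a minimal Python sketch of a client talking to an engine's API. It assumes the Docker Engine specifically, listening on its default Unix socket at `/var/run/docker.sock`. `GET /containers/json` and `GET /images/json` are Docker Engine API endpoints that list containers and locally cached images. The helper names (`build_request`, `split_response`, `call_engine`) are made up for illustration, and a real client would normally use an SDK rather than raw sockets.

```python
import socket
from typing import Tuple

# Default path of the Docker Engine's Unix socket on Linux (assumed here).
DOCKER_SOCKET = "/var/run/docker.sock"


def build_request(method: str, path: str) -> bytes:
    """Build a minimal HTTP/1.0 request for the engine's REST API."""
    return f"{method} {path} HTTP/1.0\r\nHost: localhost\r\n\r\n".encode("ascii")


def split_response(raw: bytes) -> Tuple[int, bytes]:
    """Split a raw HTTP response into (status code, body)."""
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line = head.split(b"\r\n", 1)[0]  # e.g. b"HTTP/1.0 200 OK"
    return int(status_line.split()[1]), body


def call_engine(method: str, path: str,
                sock_path: str = DOCKER_SOCKET) -> Tuple[int, bytes]:
    """Send one request to the engine and return (status code, body).

    Needs a running engine and permission to open its socket.
    """
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as s:
        s.connect(sock_path)
        s.sendall(build_request(method, path))
        chunks = []
        # HTTP/1.0: the server closes the connection when the response ends.
        while chunk := s.recv(4096):
            chunks.append(chunk)
    return split_response(b"".join(chunks))
```

On a host with Docker running, `call_engine("GET", "/containers/json")` should return a JSON list of running containers, and `call_engine("GET", "/images/json")` the images cached locally. The `docker` command line is itself a client of this same API.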

But containers don’t run on a container engine; they run on a Linux kernel (or on Windows). The container engine provides an API layer which users and other programs can interact with to tell the Linux kernel when and how to start containers. That’s the magic: a simple API and container images.
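One way to see that a container is a regular process on the kernel is to look at its namespaces. On Linux, every process has entries under `/proc/<pid>/ns`, each a symbolic link of the form `type:[inode]`. A containerized process is typically just a process whose namespace inodes differ from the host's. The sketch below is Linux-only, and its function names are hypothetical helpers written for this illustration.

```python
import os
from typing import Dict, List, Tuple


def parse_ns_link(link: str) -> Tuple[str, int]:
    """Parse a namespace link such as 'pid:[4026531836]' into ('pid', 4026531836)."""
    kind, _, rest = link.partition(":")
    return kind, int(rest.strip("[]"))


def read_namespaces(pid="self") -> Dict[str, int]:
    """Return {entry name: namespace inode} read from /proc/<pid>/ns (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, name)))
        result[name] = inode
    return result


def shared_namespaces(a: Dict[str, int], b: Dict[str, int]) -> List[str]:
    """Names of the namespaces two processes share (same inode in both)."""
    return sorted(k for k in a.keys() & b.keys() if a[k] == b[k])
```

Comparing `read_namespaces()` for a shell on the host with `read_namespaces(pid)` for a process inside a container (reading another process's entries usually requires root) would typically show different inodes for types such as `pid`, `mnt`, and `net`. Both are still ordinary entries in the host's process table.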

That’s also a container engine’s curse. A container engine makes it easy to run containers on a single host, but to run containers on multiple hosts, which most people want to do in production, one needs to delve into the world of container orchestration.

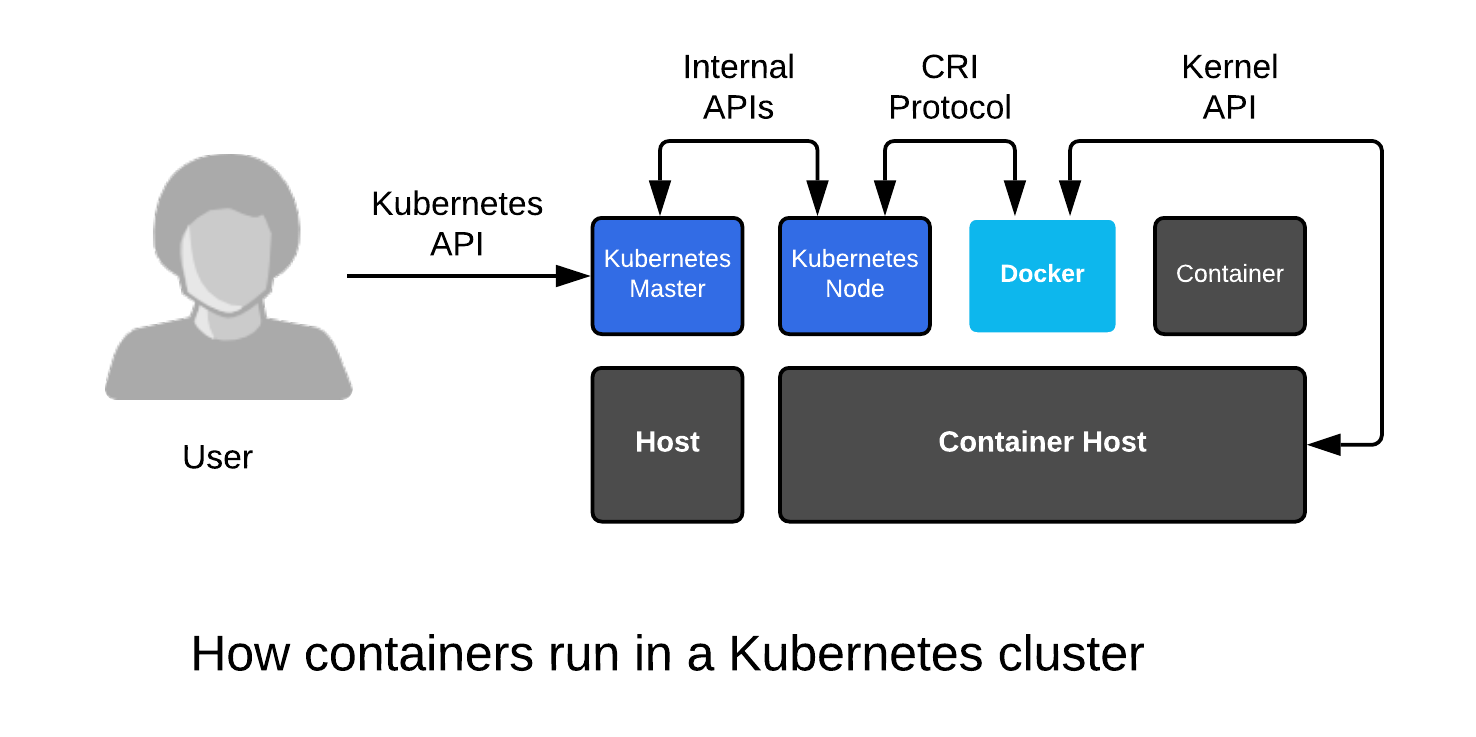

Kubernetes is the most popular container orchestrator. It also provides an API for starting, stopping, and scheduling containers, but across an entire cluster. It also adds the ability to model an entire universe of IT functions in the environment, ranging from how many replicas of a container to run to how and where to expose a service to the outside world, but that will have to wait until a future article.

For now, understand that containers are fundamentally part of the API economy. The Linux kernel, Docker, and Kubernetes all have their own APIs (and lower-level protocols like CRI), and each builds on the last. When a user interacts with a cluster, they will typically interact with the Kubernetes API, but when things go wrong in a distributed systems environment, they will often use the lower-level APIs to troubleshoot what’s going on.
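The layering can be written down as a small lookup table. This is only a rough sketch: the four-layer breakdown and the choice of one example tool per layer are simplifying assumptions made for illustration, and real clusters vary in which engine and runtime they use.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Layer:
    name: str  # role in the stack
    api: str   # interface that layer exposes
    tool: str  # a common command-line client for that interface


# Ordered from the top of the stack (what users normally touch) down to the kernel.
STACK = [
    Layer("orchestrator", "Kubernetes API", "kubectl"),
    Layer("container engine", "CRI or Docker Engine API", "crictl / docker"),
    Layer("container runtime", "OCI runtime command line", "runc"),
    Layer("kernel", "system calls and /proc", "ps"),
]


def layers_below(name: str) -> List[Layer]:
    """Return the layers underneath the named one, nearest first."""
    names = [layer.name for layer in STACK]
    return STACK[names.index(name) + 1:]
```

For example, `layers_below("orchestrator")` lists the places to look, in order, when the Kubernetes API alone does not explain a failure.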

Containers don’t run “on” Docker; Docker helps run containers in an API supply chain. In fact, it’s hard to say whether Docker will even help run containers in the future. There’s a lot of competition for a container engine dedicated to Kubernetes: containerd and CRI-O seem to be gaining ground on the Docker Engine within Kubernetes clusters because they simplify the API interactions and reduce complexity.

The containers API rabbit hole actually goes much, much deeper, and if you would like to discuss further, feel free to follow me on Twitter @fatherlinux.

I don’t see any container runtime in your drawings. Can you explain?

So, a container runtime is really a component of a container engine. It’s the piece that talks to the kernel to fire up containers. runc is a perfect example of a container runtime. The problem is, in conversation, we use the terms container runtime and container engine interchangeably, much like we do with container images and repositories. I have seen this create confusion when discussing things on mailing lists, in engineering meetings, online, even in one-on-one conversations with people.

The problem is, Docker was genius, but to an extent lucky as well. The concept of burying all of this functionality in a single daemon made it super addictive to use, but hard to understand how it really works under the covers. It’s like an iceberg: it’s beautiful until you hit what’s under the water.

I wrote this article, in collaboration with a LOT of people, to try to help others clarify their use of terminology:

https://developers.redhat.com/blog/2018/02/22/container-terminology-practical-introduction/#h.6yt1ex5wfo55

Yep, I was making the exact same point as the title in a recent internal presentation. In particular, the container infrastructure supervises containers, as in setup/start/list/stop, but once a container is started it is a regular Linux process.

One misleading visual representation is the Docker whale carrying containers on its back.

More accurate would be: containers lying on the ocean floor (solid foundation) and the Docker whale hovering above, taking care of them and doing chores.

I couldn’t agree with you more. If you ever need material to explain exactly how all of the set up works, feel free to leverage these labs and presentation:

https://learn.openshift.com/subsystems/

https://docs.google.com/presentation/d/1S-JqLQ4jatHwEBRUQRiA5WOuCwpTUnxl2d1qRUoTz5g/edit#slide=id.gb6f3e2d2d_2_213

Contributions, critiques, comments always welcome!